I am currently working on a project to implement an enterprise search solution for a large organization. This initiative was inspired by an employee survey that highlighted a recurring challenge: support engineers often struggle to locate information spread across various enterprise tools, spending hours to resolve a single issue. Employees expressed a clear need for seamless access to the resources they require to perform their roles effectively, without the inefficiency of switching between multiple systems.

Enterprise search solutions address this challenge by connecting to a company’s data sources—such as Jira, Confluence, SharePoint, Salesforce, Slack, and more—and indexing the data to make it easily accessible through a chatbot-like interface. The overarching goal is to create a centralized platform that simplifies information retrieval, enabling support engineers to work more efficiently.

At this stage, we are still in the demonstration phase, and one notable challenge we’ve encountered is the issue of hallucinations. While the system presents information in a format that appears accurate—often including links to sources—a deeper analysis sometimes reveals inaccuracies in the provided answers. Experienced support engineers can typically recognize and correct these errors, but less seasoned team members might unintentionally share incorrect information with customers, potentially leading to a poor customer experience.

Although these tools have significant potential for refinement and quality improvement, we are not yet at that stage of the project. For now, I remain cautious. In my view, providing a well-researched and accurate response, even if it takes an hour, is far better for customer satisfaction than delivering a quicker, lower-quality answer in just 15 minutes.

This experience prompted me to research and write this post, in which I share my observations and insights on the challenges of hallucinations.

AI hallucinations have become a significant challenge. They occur when AI systems, especially large language models, generate information that is inaccurate yet convincingly presented. This raises concerns about the accuracy of AI output and can worsen the spread of misinformation. It also puts public trust at risk and underscores the need for better data handling and validation to improve AI reliability.

Key Takeaways

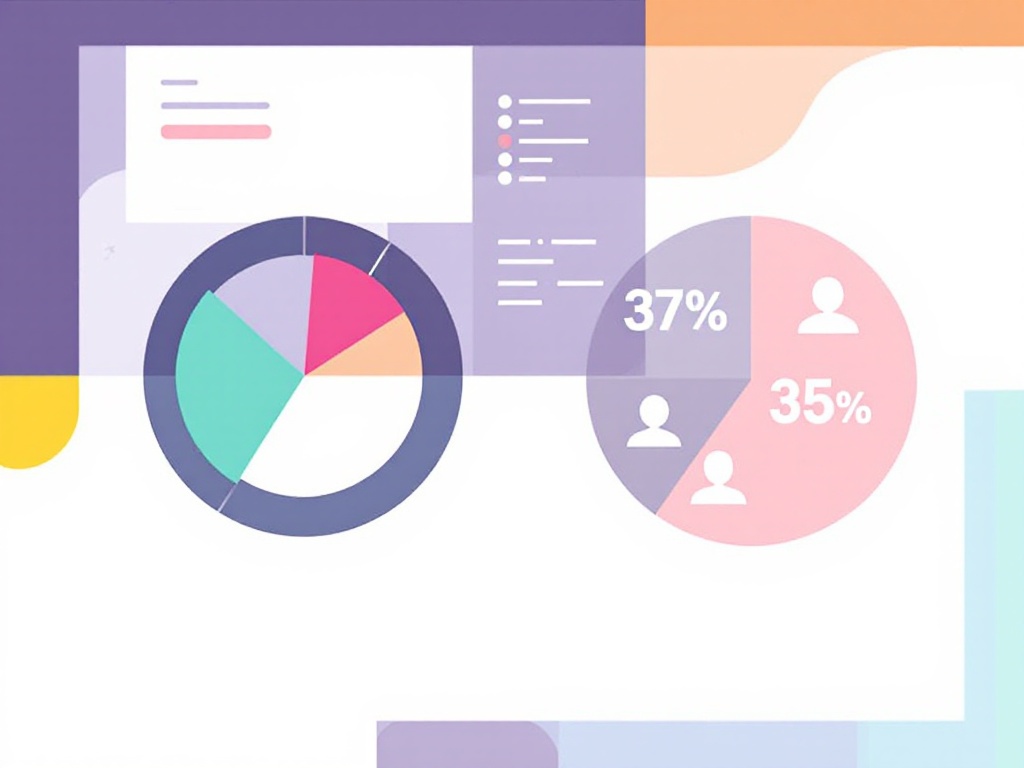

- AI hallucinations affect many users, with 77% acknowledging they’ve been misled by AI-generated content.

- These errors often result from flawed or outdated training data and complex model structures, leading to significant inaccuracies.

- Misinformation from AI can have severe consequences in crucial sectors like healthcare and finance, potentially leading to misguided decisions and risking public safety.

- AI’s inconsistent accuracy challenges user confidence, as different models often show varied performance levels.

- Strategies such as retrieval-augmented generation and the creation of AI guardrails are crucial for reducing hallucinations and ensuring correct AI outputs.

AI Hallucinations: Definition and Prevalence

AI hallucinations occur when artificial intelligence systems, particularly large language models, produce content that’s not grounded in facts but still delivered with a sense of authority. This misrepresentation can mislead users, providing misinformation disguised as truth.

Around 96% of internet users are aware of AI hallucinations, although they are not always sure how to handle them. The problem is more than theoretical: 86% of individuals report having encountered such hallucinations firsthand, and nearly 77% of users admit they have been misled by them. This is not a trivial concern, because acting on unsubstantiated information can lead to wrong decisions and a general erosion of trust.

The stakes are higher still: 93% of users believe AI hallucinations can be harmful. This sentiment underscores the potentially damaging effects on user perception and actions. Misleading information undermines the value of AI technology and raises ethical questions about its deployment and use.

As AI continues to integrate more into daily life, understanding AI hallucinations becomes crucial. Recognizing the prevalence and potential consequences allows users to engage with AI systems more critically and discerningly. By raising awareness and emphasizing accuracy, the goal is to mitigate the negative impacts while utilizing AI for its intended benefits.

Understanding the Causes of AI Hallucinations

AI hallucinations often stem from flaws in the training data. Training data that contains biases or errors, or that has simply become outdated, can cause AI to generate unreliable information. This is particularly evident in rapidly changing fields like technology and current affairs.

Additionally, limited data access and complex model structures contribute to these inaccuracies. Privacy laws often restrict data availability, limiting the AI’s breadth of learning, and high model complexity can muddle how the AI understands and processes information.

Furthermore, if AI systems absorb these data faults, they replicate and amplify erroneous or biased outputs. This cycle leads to responses that are not just incorrect but potentially hazardous. It’s crucial to ensure the AI’s foundation is strong to prevent ongoing issues related to these hallucinations.

Consequences of Misinformation Generated by AI

AI’s propensity to produce inaccurate information, known as hallucination, brings several risks that should not be underestimated. One significant threat concerns privacy and security, where hallucinations can leak confidential data or fabricate sensitive details; this category accounts for 60% of the potential risks. It is a concern not just for individuals but also a widespread threat to businesses and governments.

Bias and inequality are also perpetuated by AI, accounting for 46% of issues stemming from AI misinformation. Algorithms might inadvertently favor one group over another, deepening existing social divides. This bias can skew public perception and decision-making processes, especially when AI outputs are trusted without verification.

In sectors like healthcare and finance, accuracy is non-negotiable. Hallucinations can create health hazards, which account for 44% of potential risks, for example by promoting incorrect medical advice. Such errors can escalate quickly into incorrect treatments or misdiagnosed conditions. Financial misinformation could similarly wreak havoc, affecting market stability or personal investments.

Elections and public opinion aren’t immune to AI’s influence. AI-generated misinformation could manipulate societal beliefs and behaviors, swaying elections or eroding public trust in democratic institutions.

Consider these potential pitfalls:

- Healthcare: Inaccurate AI recommendations could endanger patient lives.

- Finance: Misleading financial data can lead to disastrous economic consequences.

- Elections: Misinformation might alter election outcomes and political landscapes.

AI should be harnessed carefully to mitigate these threats, ensuring information accuracy remains a priority.

Assessing AI Accuracy and Reliability

Users place strong trust in AI: 72% express confidence in its capabilities. Yet 75% of users have encountered misleading information from AI at least once, a significant gap between perceived reliability and actual accuracy. A recent study compared the fact-checking accuracy of several AI models. Originality.ai had the highest accuracy rate, at 72.3%. GPT-4 reached 64.9% but also recorded the highest rate of unknown responses, at 34.2%. Results this uneven show that reliability varies considerably from one model to the next.

When considering AI for information verification, it’s important to recognize these variances. While Originality.ai may be more dependable in fact-checking, the high unknown rate for GPT-4 suggests there might be limitations in its ability to provide accurate responses. These discrepancies must be accounted for in decision-making processes involving AI-generated data.
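
To make the difference between an accuracy rate and an unknown rate concrete, here is a minimal sketch of how such figures could be computed from labeled evaluation results. The study's exact scoring method is not described in this post, so the labels, the function names, and the sample data below are all assumptions made for illustration.

```python
from collections import Counter


def outcome_rates(outcomes: list[str]) -> dict[str, float]:
    """Return each outcome's share of all questions, as a percentage."""
    if not outcomes:
        raise ValueError("outcomes must not be empty")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {
        label: round(100 * counts[label] / total, 1)
        for label in ("correct", "incorrect", "unknown")
    }


def accuracy_when_answered(outcomes: list[str]) -> float:
    """Accuracy over only the questions the model committed to answering."""
    answered = [o for o in outcomes if o != "unknown"]
    if not answered:
        raise ValueError("no answered questions")
    return round(100 * answered.count("correct") / len(answered), 1)


# Hypothetical results for 20 fact-checking questions (not data from the study).
sample = ["correct"] * 13 + ["incorrect"] * 1 + ["unknown"] * 6

print(outcome_rates(sample))
print(accuracy_when_answered(sample))
```

The two functions can give very different pictures of the same model. A model that often declines to answer may look weak when scored over all questions, yet be right most of the time when it does commit to an answer. It is worth checking which definition a benchmark uses before comparing its numbers.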

Mitigation Strategies for Reducing AI Hallucinations

One effective approach to address AI hallucinations is retrieval-augmented generation (RAG). The system first retrieves relevant passages from verified, reputable sources and then asks the model to answer from those passages, which helps keep responses grounded in fact. Statistical patterns aren’t enough; systems must tap into trusted information reservoirs to provide reliable answers.
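
The retrieval step can be sketched in a few lines. This is a simplified illustration, not how any particular enterprise search product works: it ranks documents by simple keyword overlap where a real system would more likely use a search index or embeddings, and it stops at building the prompt, leaving out the model call. The `Document` class, the `KB-…` identifiers, and the sample texts are hypothetical.

```python
import re
from dataclasses import dataclass

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "of", "to", "is", "on", "how", "do", "i"}


@dataclass(frozen=True)
class Document:
    source: str  # hypothetical identifier, e.g. a ticket key or wiki page
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct non-stopword tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, docs: list[Document], top_k: int = 2) -> list[Document]:
    """Rank documents by shared query words and drop those with no overlap."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(d.text)), d) for d in docs]
    matches = [(score, d) for score, d in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:top_k]]


def build_prompt(query: str, passages: list[Document]) -> str:
    """Build a prompt that restricts the model to the retrieved passages."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in passages)
    return (
        "Answer using only the sources below and cite them by name. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        Document("KB-101", "Reset the VPN client by clearing the cached profile."),
        Document("KB-202", "Invoices are generated on the first business day."),
        Document("KB-303", "The VPN service requires port 443 to be open."),
    ]
    question = "How do I reset the VPN client?"
    print(build_prompt(question, retrieve(question, docs)))
```

Retrieval narrows what the model sees, but it does not guarantee the answer is faithful to those passages, so the output still needs checking.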

Causal AI offers another layer of accuracy by analyzing various scenarios before generating a response. It assesses data contextually, which helps in minimizing errors and improving precision. By understanding the cause-and-effect dynamics, AI can provide nuanced and factual responses.

Establishing AI guardrails is also crucial. A set of predefined rules helps prevent the generation of misleading or baseless content. By implementing these checks, AI becomes more reliable, enhancing trust with users. Systems respond more accurately when their output is consistently monitored and corrected. Together, these strategies fortify AI systems against the pitfalls of hallucinations and help make interactions dependable and informative.
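
As one example of a predefined rule, the sketch below blocks any answer that cites no source or that cites a link the retrieval step never returned. It is a minimal illustration under assumptions: the `GuardrailResult` class, the `check_answer` function, and the example URLs are hypothetical, and a production guardrail would apply many more rules than this one.

```python
import re
from dataclasses import dataclass

# Matches http(s) links up to the next whitespace or closing bracket.
URL_PATTERN = re.compile(r"https?://[^\s)>\]]+")


@dataclass
class GuardrailResult:
    allowed: bool
    reasons: list[str]


def check_answer(answer: str, retrieved_urls: set[str]) -> GuardrailResult:
    """Allow an answer only if every link it cites was actually retrieved."""
    reasons: list[str] = []
    # Strip trailing sentence punctuation that the pattern may have captured.
    cited = {url.rstrip(".,;") for url in URL_PATTERN.findall(answer)}
    if not cited:
        reasons.append("answer cites no source")
    unknown = sorted(cited - retrieved_urls)
    if unknown:
        reasons.append("cites links that were not retrieved: " + ", ".join(unknown))
    return GuardrailResult(allowed=not reasons, reasons=reasons)


if __name__ == "__main__":
    retrieved = {"https://wiki.example.com/kb/101"}
    answer = "Clear the cached profile, see https://wiki.example.com/kb/999."
    print(check_answer(answer, retrieved))
```

A check like this catches fabricated links, but it cannot tell whether a genuine link actually supports the claim made. That is the failure described earlier, where answers looked well sourced yet turned out to be inaccurate, so human review remains necessary.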

Real-World Implications of AI Hallucinations

AI hallucinations can lead to significant consequences in many sectors. Google’s Bard chatbot falsely claimed that the James Webb Space Telescope captured the first images of an exoplanet. In fact, the first such image was taken in 2004 by the European Southern Observatory’s Very Large Telescope. This example underscores the risk of misinformation spread by AI tools expected to be reliable. Similarly, Meta withdrew the public demo of its Galactica LLM after it produced inaccurate output, highlighting how AI inaccuracies can damage credibility and trust in technological solutions.

The implications extend far beyond misinformation. In critical fields like healthcare, a hallucinating AI model can have severe repercussions. Consider a healthcare model that inaccurately identifies a benign skin lesion as malignant. Such a misidentification could lead to unnecessary biopsies, anxiety, and even unwarranted treatments. The potential for these errors underscores a crucial point: AI outputs must be accurate and trustworthy.

These examples emphasize the need for vigilant checks and ongoing refinement of AI systems to mitigate risks. For developers and users, implementing robust systems to validate AI outputs is critical. Transparency in AI decision-making processes and continuous feedback loops can contribute to improving the overall reliability of these models.